Zhe’s PhD Defense - Toward Out-Of-Distribution Generalization Of Deep Learning Models

Ph.D. Dissertation Defense by Zhe Wang, Tues., 04/02/24, at 12:00PM (ET) Committee:

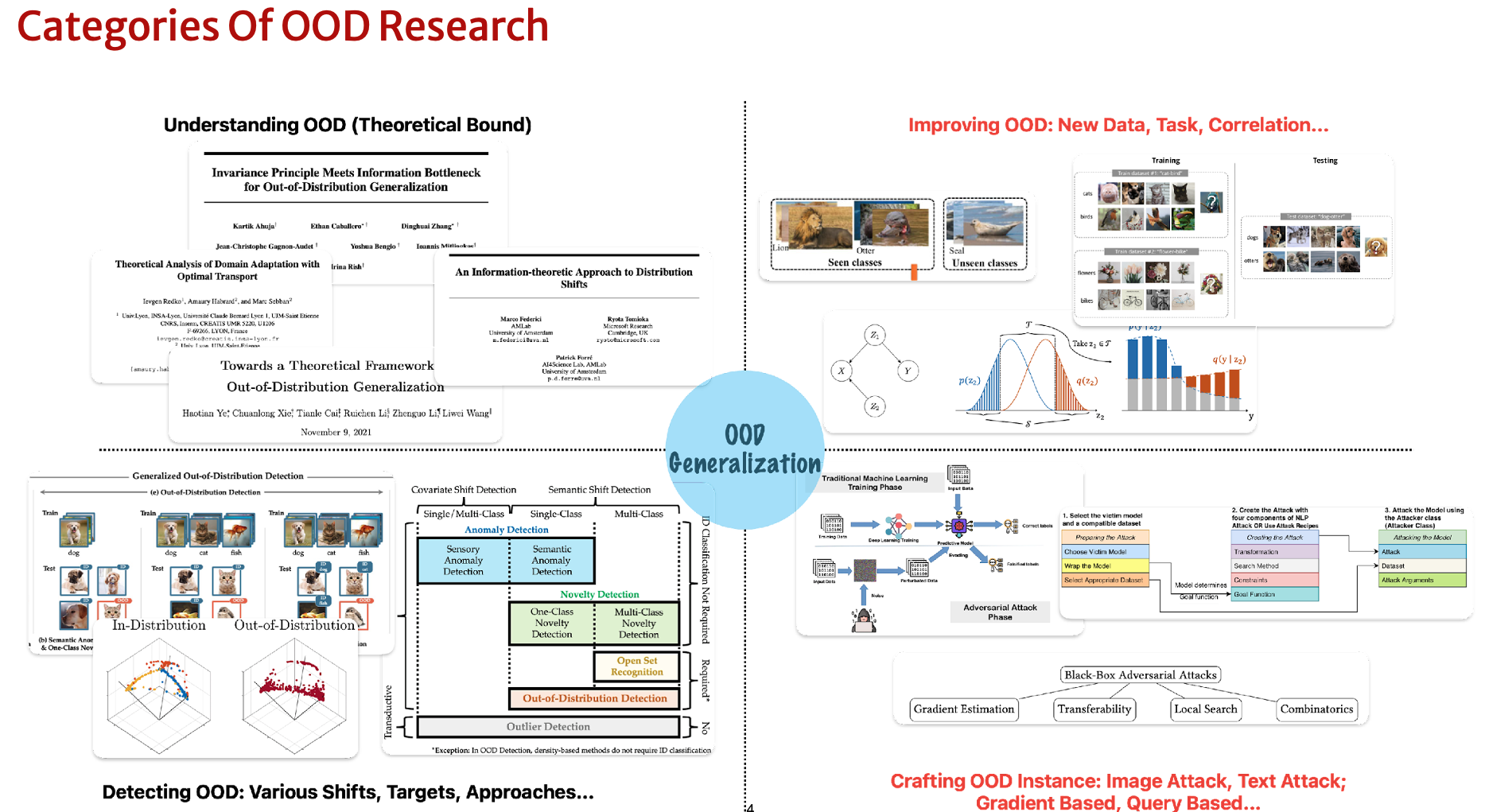

Generalization refers to how well a machine learning model adapts to new, previously unseen data. We focus on out-of-distribution (OOD) generalization.

Deep learning constructs networks of parameterized functional modules that are trained from reference examples using gradient-based optimization [Lecun19].

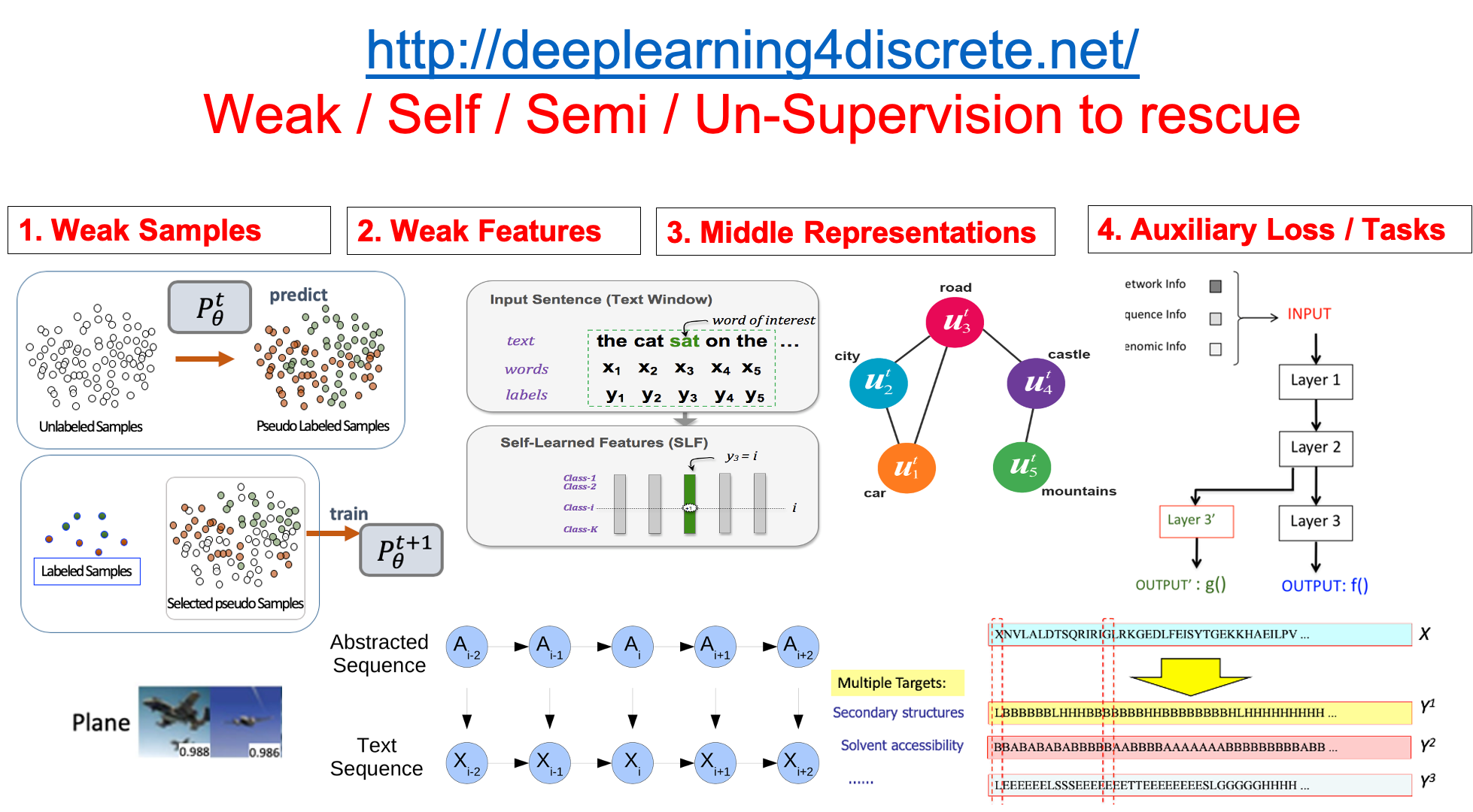

Because it is hard to estimate gradients through functions of discrete random variables, we are interested in how to make deep learning behave well on discrete structured data and structured representations. Developing such techniques is an active research area. We focus on investigating interpretable and scalable techniques for doing so.
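To make the difficulty concrete, here is a minimal sketch of one standard workaround, the score-function (REINFORCE-style) estimator, for a single Bernoulli variable. It is an illustration only and not necessarily a technique used in the dissertation. The toy objective `f`, the parameter `theta`, and the function names are all hypothetical choices made for this example. Sampling `z` is not differentiable in `theta`, so the estimator differentiates the log-probability of the sample instead.

```python
import random


def f(z):
    # Hypothetical toy objective defined on a discrete value z in {0, 1}.
    return (z - 0.45) ** 2


def exact_gradient(theta):
    # E[f(z)] = theta * f(1) + (1 - theta) * f(0) for z ~ Bernoulli(theta),
    # so its derivative with respect to theta is f(1) - f(0).
    return f(1) - f(0)


def score_function_gradient(theta, num_samples, seed=0):
    # Monte Carlo estimate of d/dtheta E[f(z)] using the identity
    #   d/dtheta E[f(z)] = E[f(z) * d/dtheta log p(z; theta)].
    rng = random.Random(seed)
    total = 0.0
    for _ in range(num_samples):
        z = 1 if rng.random() < theta else 0
        # Derivative of log p(z; theta) for a Bernoulli variable.
        score = z / theta - (1 - z) / (1 - theta)
        total += f(z) * score
    return total / num_samples


if __name__ == "__main__":
    theta = 0.3
    print("exact gradient:    ", exact_gradient(theta))
    print("estimated gradient:", score_function_gradient(theta, 200_000))
```

The estimate is unbiased but noisy: it needs many samples to approach the exact value even in this one-variable case, which hints at why gradient estimation for discrete structures remains hard at scale.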

Arshdeep Sekhon’s PhD Defense June 29, 2022.

Ph.D. Dissertation Defense by Jack Lanchantin Tuesday, July 20th, 2021 at 2:00 PM (ET), via Zoom. Committee: Vicente Ordóñez Román, Committee Chair, ...

Title: General Multi-label Image Classification with Transformers

Title: Curriculum Labeling: Self-paced Pseudo-Labeling for Semi-Supervised Learning

Title: Searching for a Search Method: Benchmarking Search Algorithms for Generating NLP Adversarial Examples

Title: Reevaluating Adversarial Examples in Natural Language

PhD Defense Presentation by Beilun Wang Friday, July 20, 2018 at 9:00 am in Rice 242 Committee Members: Mohammad Mahmoody (Chair), Yanjun Qi (Advisor), ...

Tool MUST-CNN: A Multilayer Shift-and-Stitch Deep Convolutional Architecture for Sequence-based Protein Structure Prediction

Title: Deep Learning for Character-based Information Extraction on Chinese and Protein Sequence

Tool Multitask-ProteinTagging: A unified multitask architecture for predicting local protein properties

Paper0: Learning to rank with (a lot of) word features