EMNLP - TextAttack: A Framework for Adversarial Attacks in Natural Language Processing

Title: TextAttack: A Framework for Adversarial Attacks in Natural Language Processing

GitHub: https://github.com/QData/TextAttack

Paper: https://arxiv.org/abs/2005.05909

Abstract

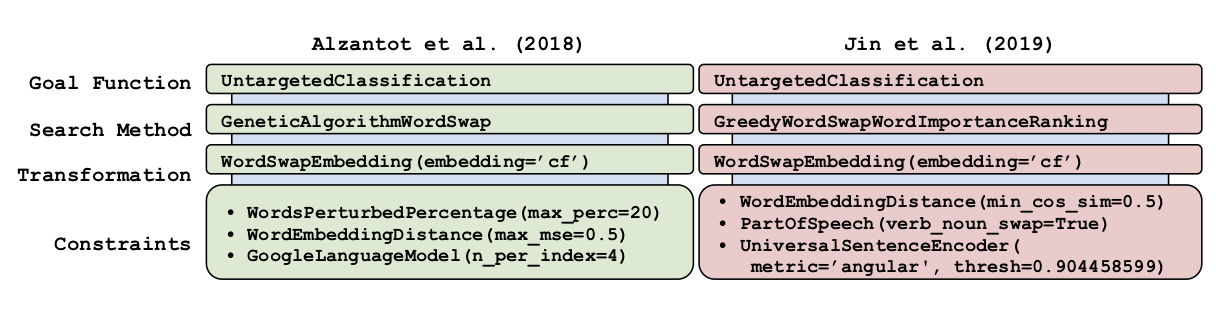

TextAttack is a library for generating natural language adversarial examples to fool natural language processing (NLP) models. TextAttack builds attacks from four components: a search method, a goal function, a transformation, and a set of constraints. Researchers can use these components to easily assemble new attacks. Individual components can be isolated and compared for easier ablation studies. TextAttack currently supports attacks on models trained for text classification and entailment across a variety of datasets. Additionally, TextAttack’s modular design makes it easily extensible to new NLP tasks, models, and attack strategies. TextAttack code and tutorials are available at https://github.com/QData/TextAttack.

TextAttack is a Python framework for adversarial attacks, data augmentation, and model training in NLP.
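To make the four-component decomposition from the abstract concrete, here is a minimal, dependency-free sketch. It does not use TextAttack's API: the toy word-counting model, the synonym table, and every function name (`toy_model`, `transformation`, `constraint`, `goal_reached`, `breadth_first_search`) are hypothetical and chosen only for illustration. It shows how a transformation proposes candidates, a constraint filters them, a goal function decides success, and a search method ties the three together.

```python
from typing import List, Optional

# Hypothetical stand-in for an NLP classifier: 1 = positive, 0 = not positive.
POSITIVE = {"good", "great", "excellent"}
NEGATIVE = {"bad", "awful", "terrible"}

def toy_model(words: List[str]) -> int:
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    return 1 if score > 0 else 0

# Transformation: propose every variant that swaps one word for a listed synonym.
SYNONYMS = {"good": ["fine", "decent"], "great": ["notable"], "movie": ["film"]}

def transformation(words: List[str]) -> List[List[str]]:
    candidates = []
    for i, word in enumerate(words):
        for synonym in SYNONYMS.get(word, []):
            candidates.append(words[:i] + [synonym] + words[i + 1:])
    return candidates

# Constraint: reject candidates that change more than `limit` words.
def constraint(original: List[str], candidate: List[str], limit: int = 2) -> bool:
    return sum(a != b for a, b in zip(original, candidate)) <= limit

# Goal function: untargeted attack, i.e. any change in the predicted label.
def goal_reached(original_label: int, candidate: List[str]) -> bool:
    return toy_model(candidate) != original_label

# Search method: breadth-first exploration of valid candidates.
def breadth_first_search(original: List[str], max_steps: int = 3) -> Optional[List[str]]:
    original_label = toy_model(original)
    frontier = [original]
    for _ in range(max_steps):
        next_frontier = []
        for text in frontier:
            for candidate in transformation(text):
                if not constraint(original, candidate):
                    continue
                if goal_reached(original_label, candidate):
                    return candidate
                next_frontier.append(candidate)
        frontier = next_frontier
    return None  # no adversarial example found within the budget

adversarial = breadth_first_search("a good movie".split())
print(adversarial)
```

Because each component is a separate function, any one of them can be replaced (for example, a stricter constraint or a different search strategy) while the others stay fixed, which is the kind of isolation the abstract describes for ablation studies.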

Citations

@misc{morris2020textattack,
  title={TextAttack: A Framework for Adversarial Attacks in Natural Language Processing},
  author={John X. Morris and Eli Lifland and Jin Yong Yoo and Yanjun Qi},
  year={2020},
  eprint={2005.05909},
  archivePrefix={arXiv},
  primaryClass={cs.CL}
}

Support or Contact

Having trouble with our tools? Please contact Dr. Qi and we’ll help you sort it out.