JointNets R package for Joint Network Estimation, Visualization, Simulation and Evaluation from Heterogeneous Samples

18 Jul 2019

JointNets R package: a Suite of Fast and Scalable Tools for Learning Multiple Sparse Gaussian Graphical Models from Heterogeneous Data with Additional Knowledge

JointNets R package on CRAN: URL

GitHub site: URL

Talk slides by Zhaoyang about the JointNets implementations:

Demo GUI Run:

Demo Visualization of a few learned networks:

- DIFFEE on a breast cancer gene expression dataset

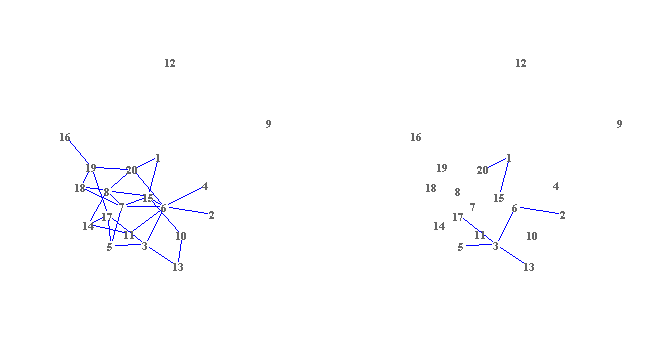

- JEEK on a simulated dataset with samples from multiple contexts and nodes that carry extra spatial information

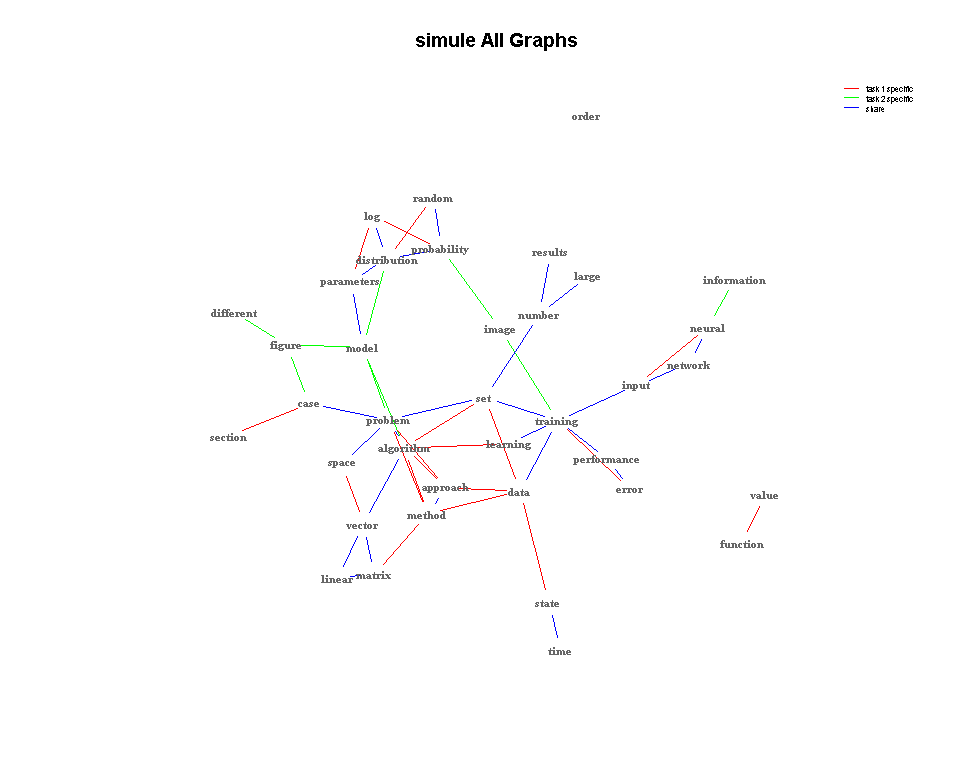

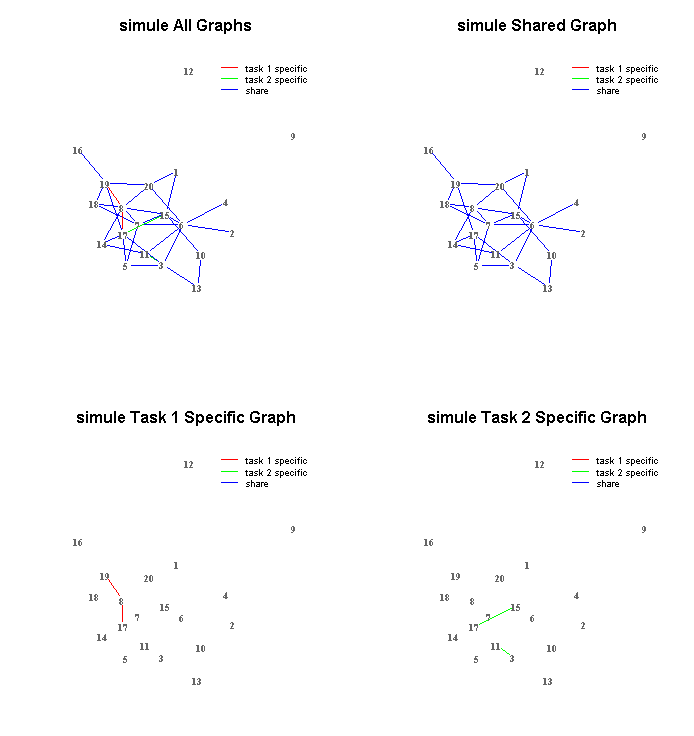

- SIMULE on a word-based text dataset with multiple categories

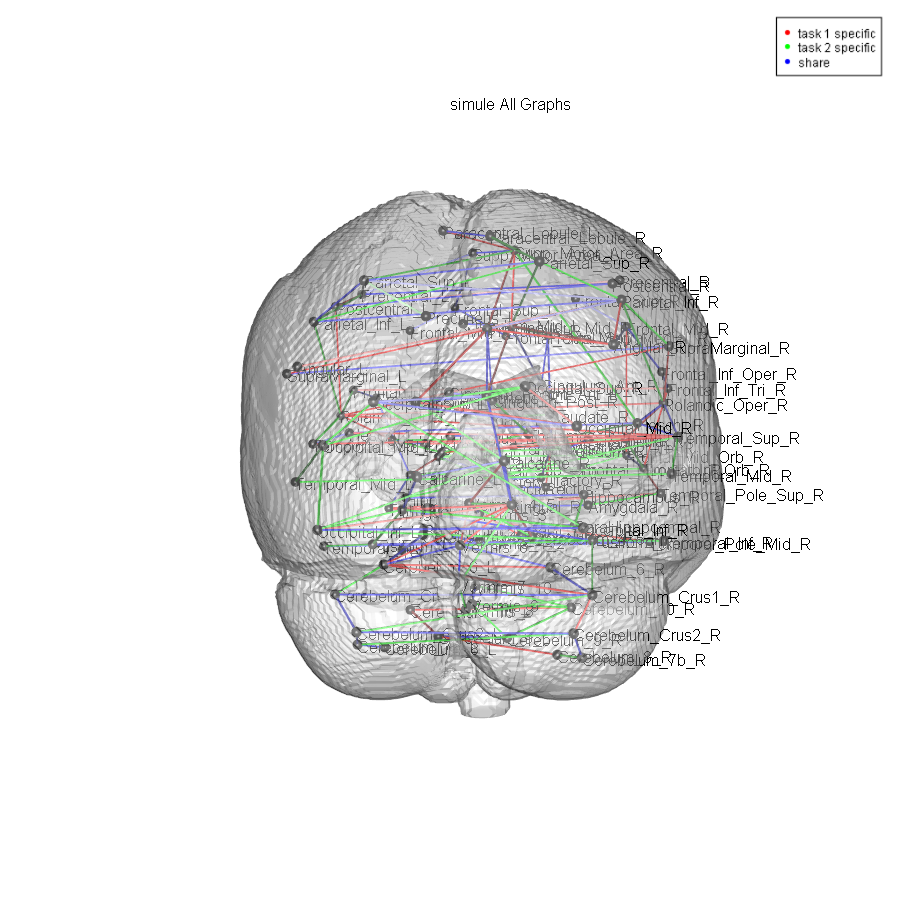

- SIMULE on a multi-context brain fMRI dataset

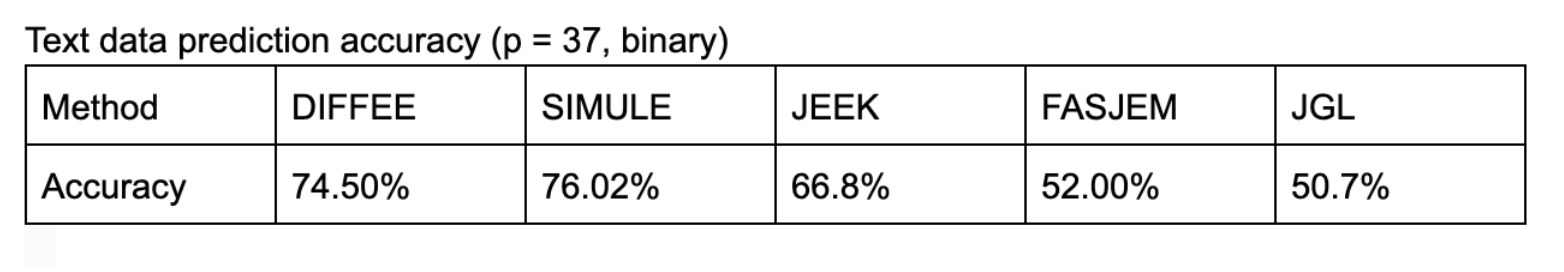

- Demo downstream task using the learned graphs for classification, e.g., on a two-class text dataset, we get

- With Zoom In/Out function

- With Multiple window design, legend, title coloring schemes

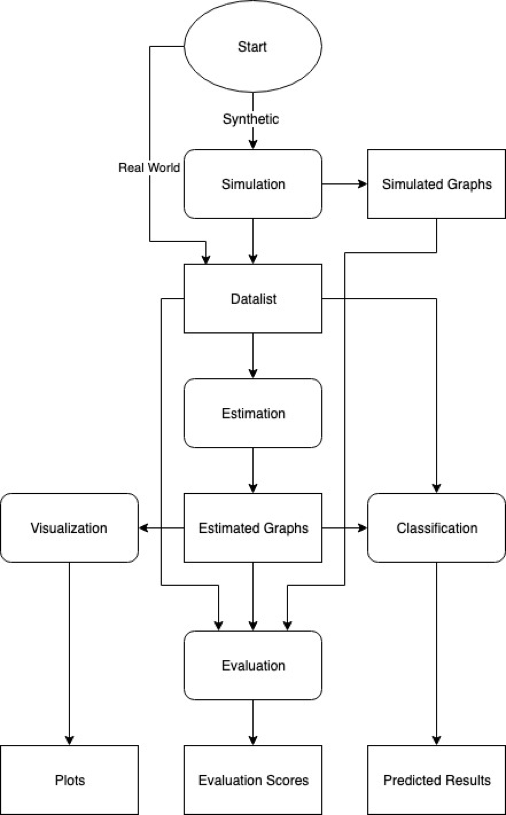

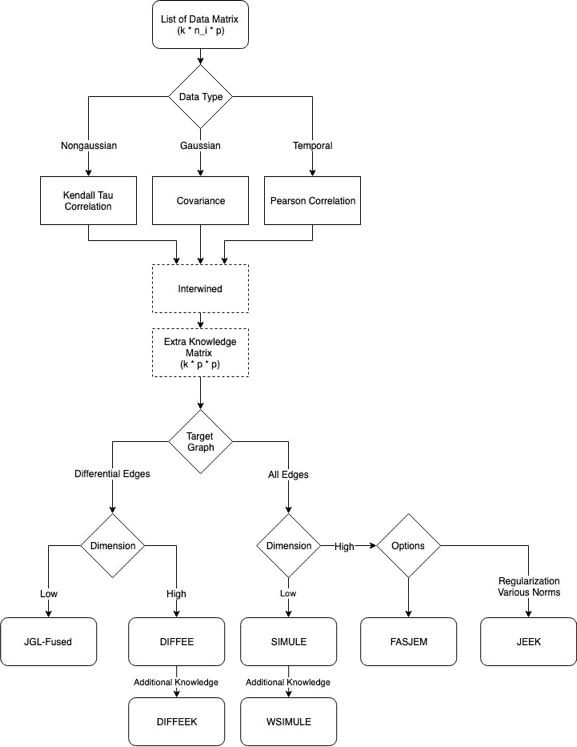
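The downstream classification demo listed above can be illustrated with a small sketch. This is not the JointNets API: it is a NumPy example under stated assumptions, in which each class gets its own Gaussian model and a sample is assigned to the class with the highest log-likelihood (equal class priors assumed). The ridge-regularized inverse covariance is only a stand-in for the sparse precision matrices that a joint graph estimator would supply, and the function names and synthetic data are hypothetical.

```python
import numpy as np


def fit_class_gaussian(X, ridge=0.1):
    """Return (mean, precision, log-determinant of precision) for one class.

    Assumption: the ridge-regularized inverse covariance stands in for a
    sparse precision matrix learned by a joint graph estimator.
    """
    mean = X.mean(axis=0)
    cov = np.cov(X, rowvar=False) + ridge * np.eye(X.shape[1])
    precision = np.linalg.inv(cov)
    _, logdet = np.linalg.slogdet(precision)
    return mean, precision, logdet


def log_likelihood(X, mean, precision, logdet):
    """Gaussian log-likelihood of each row, up to an additive constant."""
    centered = X - mean
    quad = np.einsum("ij,jk,ik->i", centered, precision, centered)
    return 0.5 * logdet - 0.5 * quad


def predict(X, models):
    """Assign each row to the class whose Gaussian model scores highest."""
    scores = np.column_stack([log_likelihood(X, *m) for m in models])
    return scores.argmax(axis=1)


# Synthetic two-class data (hypothetical, for illustration only).
rng = np.random.default_rng(0)
X0 = rng.normal(loc=0.0, scale=1.0, size=(200, 5))
X1 = rng.normal(loc=3.0, scale=1.0, size=(200, 5))
models = [fit_class_gaussian(X0), fit_class_gaussian(X1)]

X_test = np.vstack([rng.normal(0.0, 1.0, (50, 5)),
                    rng.normal(3.0, 1.0, (50, 5))])
y_test = np.array([0] * 50 + [1] * 50)
accuracy = (predict(X_test, models) == y_test).mean()
print(f"accuracy: {accuracy:.2f}")
```

In a real workflow, the per-class precision matrices would presumably come from one of the package's estimators rather than from a direct matrix inverse; only the scoring step would stay the same.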

Flow charts of the code design (function and module level) in the JointNets package

Citations

    @conference{wang2018jeek,
      Author = {Wang, Beilun and Sekhon, Arshdeep and Qi, Yanjun},
      Booktitle = {Proceedings of The 35th International Conference on Machine Learning (ICML)},
      Title = {A Fast and Scalable Joint Estimator for Integrating Additional Knowledge in Learning Multiple Related Sparse Gaussian Graphical Models},
      Year = {2018}
    }

Support or Contact

Having trouble with our tools? Please contact Arsh and we’ll help you sort it out.