DiffGraph (Index of Posts):

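18 Jun 2019

Title: Adding Extra Knowledge in Scalable Learning of Sparse Differential Gaussian Graphical Models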

Abstract

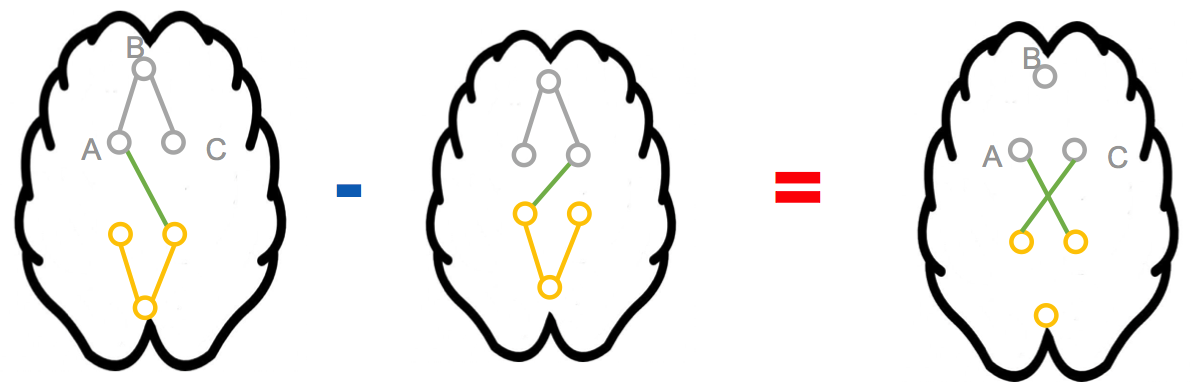

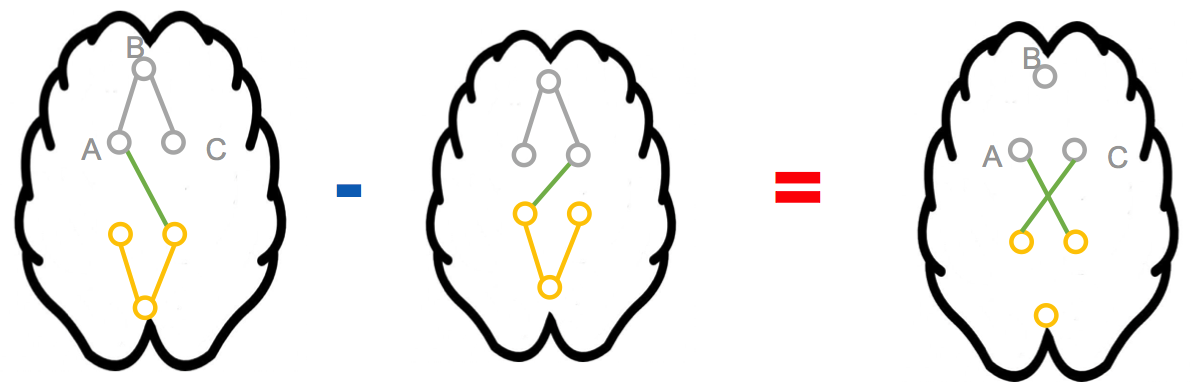

We focus on integrating different types of extra knowledge (other than the observed samples) when estimating the sparse structure change between two p-dimensional Gaussian Graphical Models (i.e., differential GGMs). Previous differential GGM estimators either fail to include additional knowledge or cannot scale up to high-dimensional (large p) settings. This paper proposes a novel method, KDiffNet, that incorporates Additional Knowledge in identifying Differential Networks via an Elementary Estimator. We design a novel hybrid norm as a superposition of two structured norms, guided by extra edge information and additional node-group knowledge. KDiffNet is solved through a fast parallel proximal algorithm, enabling it to work in large-scale settings. KDiffNet can incorporate various combinations of existing knowledge without re-designing the optimization. Through rigorous statistical analysis, we show that, while considering more evidence, KDiffNet achieves the same convergence rate as the state-of-the-art. Empirically, on multiple synthetic datasets and one real-world fMRI brain dataset, KDiffNet significantly outperforms cutting-edge baselines in prediction performance while achieving the same or lower time cost.
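The hybrid norm superposes an edge-level penalty and a node-group penalty. The R sketch below rests on two assumptions: a weighted ℓ1 norm carries the edge knowledge, and a non-overlapping group ℓ2 norm carries the node groups. It implements the proximal map of each term. A parallel proximal algorithm can evaluate maps like these independently at each iteration. The following names are hypothetical:

- the function names;
- the weight matrix `W`;
- the index list `groups`;
- the step size `t`.

KDiffNet's exact objective, constraints and update rules are given in the paper.

```r
# Hypothetical sketch, not the KDiffNet implementation: proximal maps of the two
# kinds of structured penalties that an "edge + node-group" hybrid norm combines.

# Prox of t * sum(W * |X|): elementwise soft-thresholding with per-edge weights W
# (e.g. weights derived from prior edge knowledge; KDiffNet's choice is in the paper).
prox_weighted_l1 <- function(X, W, t) {
  sign(X) * pmax(abs(X) - t * W, 0)
}

# Prox of t * sum_g ||X[g]||_2 for non-overlapping groups of (linear) indices:
# each group is shrunk toward zero as a block, and zeroed if its norm is <= t.
prox_group_l2 <- function(X, groups, t) {
  out <- X
  for (g in groups) {
    nrm <- sqrt(sum(X[g]^2))
    out[g] <- if (nrm > t) X[g] * (1 - t / nrm) else 0
  }
  out
}

# Toy usage on a 2 x 2 matrix and on a 4-vector split into two groups.
X <- matrix(c(0.9, -0.2, 0.05, 0.4), nrow = 2)
prox_weighted_l1(X, W = matrix(1, 2, 2), t = 0.1)
prox_group_l2(c(3, 4, 0.1, 0.1), groups = list(1:2, 3:4), t = 1)
```

Both maps are closed-form and cheap, which is what makes proximal splitting attractive when p is large.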

Citations

@conference{arsh19kdiffNet,

Author = {Sekhon, Arshdeep and Wang, Beilun and Qi, Yanjun},

Title = {Adding Extra Knowledge in Scalable Learning of Sparse Differential Gaussian Graphical Models},

Year = {2019}

}

Having trouble with our tools? Please contact Arsh and we’ll help you sort it out.

01 Mar 2019

Title: Neural Message Passing for Multi-Label Classification

Abstract

Multi-label classification (MLC) is the task of assigning a set of target labels to a given sample. Modeling the combinatorial label interactions in MLC has been a long-standing challenge. Recurrent neural network (RNN) based encoder-decoder models have shown state-of-the-art performance for solving MLC. However, the sequential nature of modeling label dependencies through an RNN limits its ability to perform parallel computation, predict dense labels, and provide interpretable results. In this paper, we propose Message Passing Encoder-Decoder (MPED) Networks, aiming to provide fast, accurate, and interpretable MLC. MPED networks model the joint prediction of labels by replacing all RNNs in the encoder-decoder architecture with message passing mechanisms, and they dispense with autoregressive inference entirely. The proposed models are simple, fast, accurate, interpretable, and structure-agnostic (they can be used on data with known or unknown structure). Experiments on seven real-world MLC datasets show that the proposed models outperform autoregressive RNN models across five different metrics, with a significant speedup during both training and testing.
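The R sketch below illustrates why message passing removes the sequential bottleneck of RNN decoding. It shows one attention-based message-passing step, assuming scaled dot-product attention with a residual update. It is a generic illustration, not the MPED architecture. The function `message_passing_step`, its projection matrices `Wq`, `Wk` and `Wv`, and the toy embeddings are hypothetical.

```r
# Hypothetical sketch of one attention-based message-passing step;
# not the MPED architecture itself.

# Row-wise softmax, subtracting each row's max for numerical stability.
softmax_rows <- function(S) {
  E <- exp(S - apply(S, 1, max))
  E / rowSums(E)
}

# Every target node (e.g. a label) receives a weighted sum of messages from all
# source nodes (e.g. input features) in one matrix product, so all targets are
# updated in parallel rather than one at a time as in autoregressive decoding.
message_passing_step <- function(H_target, H_source, Wq, Wk, Wv) {
  Q <- H_target %*% Wq
  K <- H_source %*% Wk
  V <- H_source %*% Wv
  A <- softmax_rows(Q %*% t(K) / sqrt(ncol(K)))      # attention weights; rows sum to 1
  list(updated = H_target + A %*% V, attention = A)  # residual update
}

set.seed(1)
d <- 4
labels <- matrix(rnorm(3 * d), nrow = 3)  # 3 toy label embeddings
feats  <- matrix(rnorm(5 * d), nrow = 5)  # 5 toy input-feature embeddings
out <- message_passing_step(labels, feats, diag(d), diag(d), diag(d))
round(out$attention, 2)  # which features each label attended to
```

Each row of `out$attention` shows how strongly one label drew on each input. Inspecting those rows is one way such models can expose interpretable label-input dependencies.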

Citations

@article{lanchantin2018neural,

title={Neural Message Passing for Multi-Label Classification},

author={Lanchantin, Jack and Sekhon, Arshdeep and Qi, Yanjun},

year={2018}

}

Having trouble with our tools? Please contact Jack Lanchantin and we’ll help you sort it out.

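08 Nov 2017

Title: Fast and Scalable Learning of Sparse Changes in High-Dimensional Gaussian Graphical Model Structure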

Presentation: Slides @ AISTATS 2018

Poster @ NIPS 2017 Workshop on Advances in Modeling and Learning Interactions from Complex Data.

R package: CRAN

install.packages("diffee")

library(diffee)

demo(diffee)

Abstract

We focus on the problem of estimating the change in the dependency structures of two p-dimensional Gaussian Graphical Models (GGMs). Previous studies of sparse change estimation in GGMs involve expensive and difficult non-smooth optimization. We propose a novel method, DIFFEE, for estimating DIFFerential networks via an Elementary Estimator in high-dimensional settings. DIFFEE has a fast, closed-form solution that enables it to work in large-scale settings. We conduct a rigorous statistical analysis showing that, surprisingly, DIFFEE achieves the same asymptotic convergence rates as state-of-the-art estimators that are much more difficult to compute. Our experimental results on multiple synthetic datasets and one real-world brain connectivity dataset show strong performance improvements over baselines, as well as significant computational benefits.
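The closed-form solution can be sketched in a few lines of R. This sketch assumes the elementary-estimator form `Delta_hat = S_lambda([T_v(Sigma_d)]^{-1} - [T_v(Sigma_c)]^{-1})`. Here `T_v` soft-thresholds the off-diagonal entries of a sample covariance matrix, and `S_lambda` soft-thresholds every entry. The function names and the tuning values `v` and `lambda` are hypothetical. This is not the code of the `diffee` package.

```r
# Hypothetical sketch, assuming the closed form
#   Delta_hat = S_lambda( [T_v(Sigma_d)]^{-1} - [T_v(Sigma_c)]^{-1} );
# not the diffee package's implementation.

# S_lambda: elementwise soft-thresholding.
soft_threshold <- function(X, lambda) sign(X) * pmax(abs(X) - lambda, 0)

# T_v: soft-threshold off-diagonal entries, keep the variances on the diagonal.
threshold_offdiag <- function(S, v) {
  Tv <- soft_threshold(S, v)
  diag(Tv) <- diag(S)
  Tv
}

diffee_sketch <- function(X_c, X_d, v, lambda) {
  B_c <- solve(threshold_offdiag(cov(X_c), v))  # assumes T_v(Sigma_c) is invertible
  B_d <- solve(threshold_offdiag(cov(X_d), v))  # assumes T_v(Sigma_d) is invertible
  soft_threshold(B_d - B_c, lambda)             # sparse estimate of the precision change
}

# Toy usage on two simulated samples (control c, case d).
set.seed(42)
p <- 5; n <- 500
X_c <- matrix(rnorm(n * p), nrow = n)
X_d <- matrix(rnorm(n * p), nrow = n)
Delta <- diffee_sketch(X_c, X_d, v = 0.05, lambda = 0.2)
```

No iterative solver is involved: the estimate comes from two matrix inversions and a few elementwise thresholding passes, which is where the computational advantage of a closed form comes from. When p > n, this sketch relies on choosing `v` so that the thresholded covariance matrices remain invertible.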

Citations

@InProceedings{pmlr-v84-wang18f,

title = {Fast and Scalable Learning of Sparse Changes in High-Dimensional Gaussian Graphical Model Structure},

author = {Beilun Wang and Arshdeep Sekhon and Yanjun Qi},

booktitle = {Proceedings of the Twenty-First International Conference on Artificial Intelligence and Statistics},

pages = {1691--1700},

year = {2018},

editor = {Amos Storkey and Fernando Perez-Cruz},

volume = {84},

series = {Proceedings of Machine Learning Research},

address = {Playa Blanca, Lanzarote, Canary Islands},

month = {09--11 Apr},

publisher = {PMLR},

pdf = {http://proceedings.mlr.press/v84/wang18f/wang18f.pdf},

url = {http://proceedings.mlr.press/v84/wang18f.html},

abstract = {We focus on the problem of estimating the change in the dependency structures of two $p$-dimensional Gaussian Graphical models (GGMs). Previous studies for sparse change estimation in GGMs involve expensive and difficult non-smooth optimization. We propose a novel method, DIFFEE for estimating DIFFerential networks via an Elementary Estimator under a high-dimensional situation. DIFFEE is solved through a faster and closed form solution that enables it to work in large-scale settings. We conduct a rigorous statistical analysis showing that surprisingly DIFFEE achieves the same asymptotic convergence rates as the state-of-the-art estimators that are much more difficult to compute. Our experimental results on multiple synthetic datasets and one real-world data about brain connectivity show strong performance improvements over baselines, as well as significant computational benefits.}

}

Having trouble with our tools? Please contact Beilun and we’ll help you sort it out.