Learning the Dependency Structure of Latent Factors

20 Jun 2014

Paper: @NeurIPS12

- Yunlong He, Yanjun Qi, Koray Kavukcuoglu, Haesun Park

GitHub

Poster PDF

Abstract:

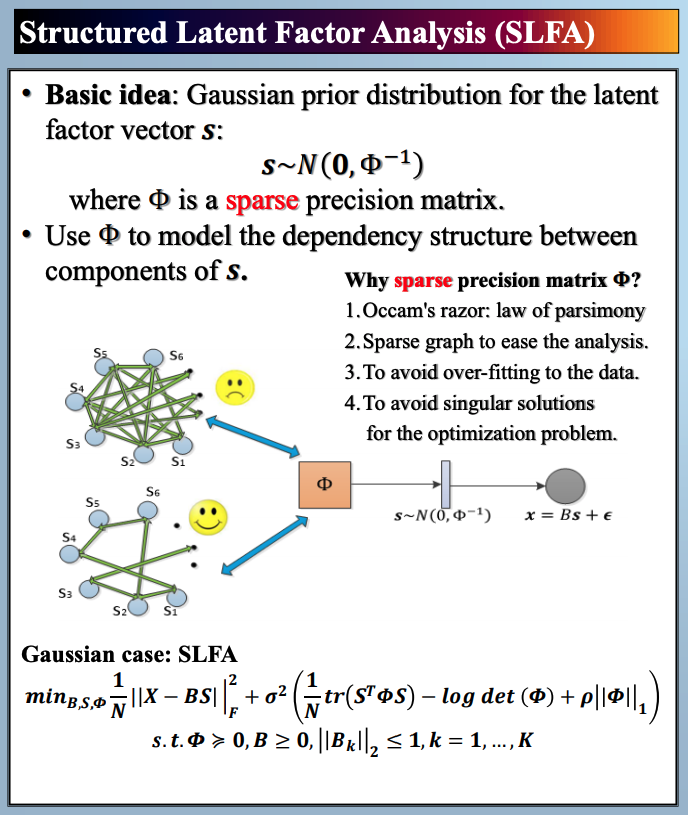

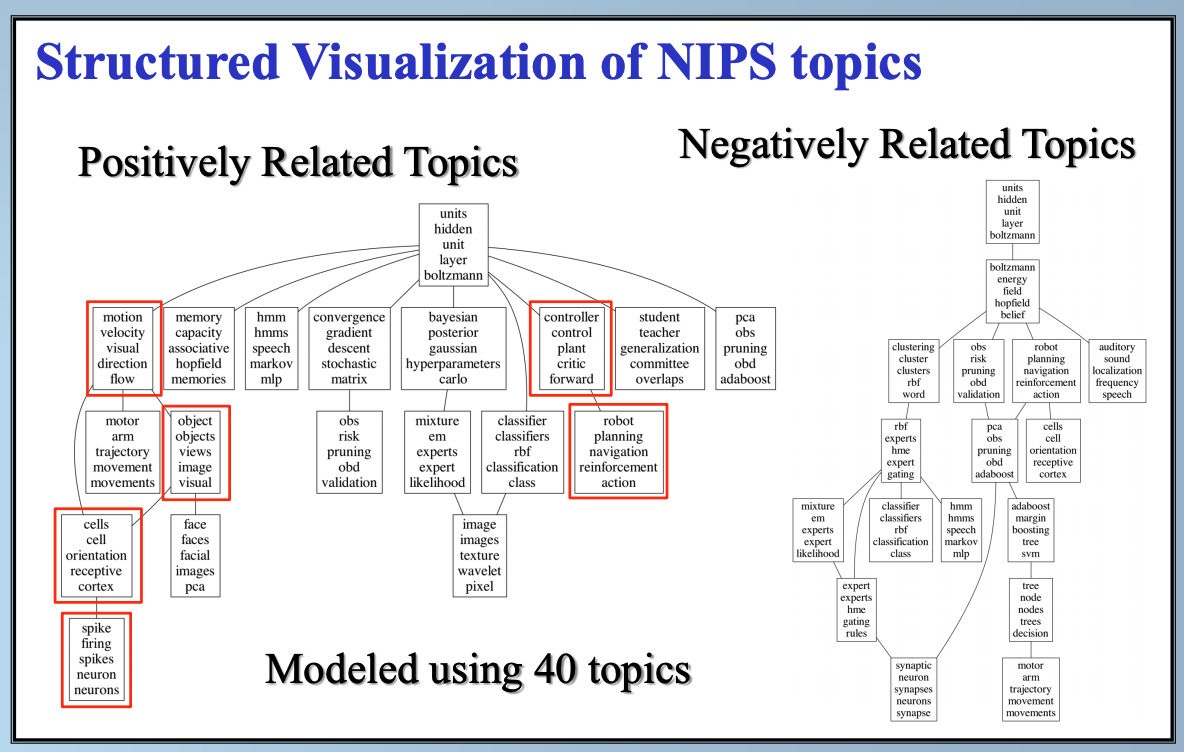

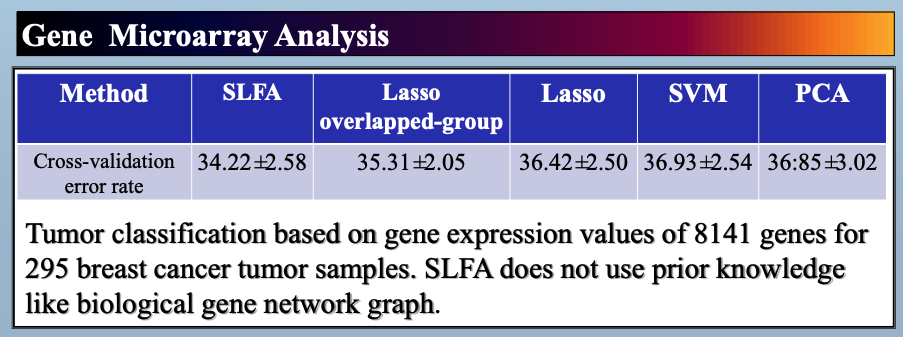

In this paper, we study latent factor models with a dependency structure in the latent space. We propose a general learning framework that induces sparsity on the undirected graphical model imposed on the vector of latent factors. A novel latent factor model, SLFA, is then proposed as a matrix factorization problem with a special regularization term that encourages collaborative reconstruction. The main benefit (and novelty) of the model is that we can simultaneously learn a lower-dimensional representation of the data and explicitly model the pairwise relationships between latent factors. An online learning algorithm is devised to make the model feasible for large-scale learning problems. Experimental results on two synthetic and two real-world data sets demonstrate that the pairwise relationships and latent factors learned by our model provide a more structured way of exploring high-dimensional data, and that the learned representations achieve state-of-the-art classification performance.
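To make the general shape of such a model concrete, here is a minimal sketch in Python with NumPy. It is not the paper's implementation. It assumes an objective of the kind the abstract describes: a matrix-factorization reconstruction term plus a Gaussian graphical model term on the latent factors, with an L1 penalty that encourages a sparse precision matrix. The function name `slfa_style_objective`, the symbols `X`, `B`, `S`, `Phi`, and the weights `sigma` and `rho` are all assumptions made for illustration; any constraints or exact weighting used in the paper are not reproduced here.

```python
import numpy as np


def slfa_style_objective(X, B, S, Phi, sigma=1.0, rho=0.1):
    """Evaluate an illustrative (assumed) structured latent factor objective.

    X   : (d, n) data matrix, one sample per column
    B   : (d, k) basis matrix
    S   : (k, n) latent factors, one column per sample
    Phi : (k, k) symmetric positive-definite precision matrix over factors
    """
    n = X.shape[1]

    # Reconstruction error of the factorization X ~ B S, averaged over samples.
    recon = np.linalg.norm(X - B @ S, "fro") ** 2 / n

    # Cholesky raises LinAlgError if Phi is not positive definite,
    # and gives a numerically stable log-determinant.
    L = np.linalg.cholesky(Phi)
    logdet = 2.0 * np.sum(np.log(np.diag(L)))

    # Gaussian negative log-likelihood of the factors (up to constants),
    # using the empirical second-moment matrix S S^T / n.
    nll = np.trace(Phi @ (S @ S.T) / n) - logdet

    # L1 penalty on off-diagonal entries: pushes pairwise links toward zero.
    l1 = np.abs(Phi).sum() - np.abs(np.diag(Phi)).sum()

    return recon + sigma * (nll + rho * l1)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    B = rng.normal(size=(5, 3))
    S = rng.normal(size=(3, 20))
    X = B @ S + 0.01 * rng.normal(size=(5, 20))
    print(slfa_style_objective(X, B, S, np.eye(3)))
```

In this sketch, a nonzero off-diagonal entry `Phi[i, j]` corresponds to an edge between factors `i` and `j` in the undirected graph, so a sparser `Phi` means fewer modeled pairwise dependencies.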

Citation

    @inproceedings{he2012learning,
      title={Learning the dependency structure of latent factors},
      author={He, Yunlong and Qi, Yanjun and Kavukcuoglu, Koray and Park, Haesun},
      booktitle={Advances in Neural Information Processing Systems},
      pages={2366--2374},
      year={2012}
    }

Support or Contact

Having trouble with our tools? Please contact Yanjun Qi and we’ll help you sort it out.